Authors

- Olisemeka Nmarkwe

- Oluwaseun Doherty

Project Overview

This project systematically compares several machine learning algorithms for EEG signal classification: Artificial Neural Networks (ANN), Decision Trees, K-Nearest Neighbors (KNN), and Support Vector Machines (SVM). The pipeline covers the full workflow, from data ingestion and preprocessing, through model training with established practices such as early stopping and hyperparameter selection, to multi-metric evaluation and visualization. The work combines ML algorithm design with the engineering workflow needed for reproducible experimentation and deployment.
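Hyperparameter selection of the kind mentioned above can be sketched with scikit-learn's `GridSearchCV`. This is a minimal illustration, not the project's configuration: the SVM grid, the 5-fold cross-validation, the macro-F1 scoring, and the synthetic data from `make_classification` (standing in for an EEG feature matrix) are all assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for an EEG feature matrix (hypothetical: 300 epochs x 20 features, 2 classes).
X, y = make_classification(n_samples=300, n_features=20, n_informative=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Scaling lives inside the pipeline, so each CV fold is scaled using only its own training part.
pipe = Pipeline([("scale", StandardScaler()), ("svm", SVC())])
param_grid = {"svm__C": [0.1, 1, 10], "svm__kernel": ["linear", "rbf"]}

# Try every grid combination with 5-fold CV; refit the best one on the full training split.
search = GridSearchCV(pipe, param_grid, cv=5, scoring="f1_macro")
search.fit(X_train, y_train)

print("best params:", search.best_params_)
# The test split is touched only once, after model selection is finished.
print("held-out macro F1:", search.score(X_test, y_test))
```

Keeping the scaler inside the searched pipeline avoids leaking test-fold statistics into preprocessing during cross-validation.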

Key Features

- Automated EEG Data Preprocessing: Standardization, feature selection, and dimensionality reduction for robust input representations.

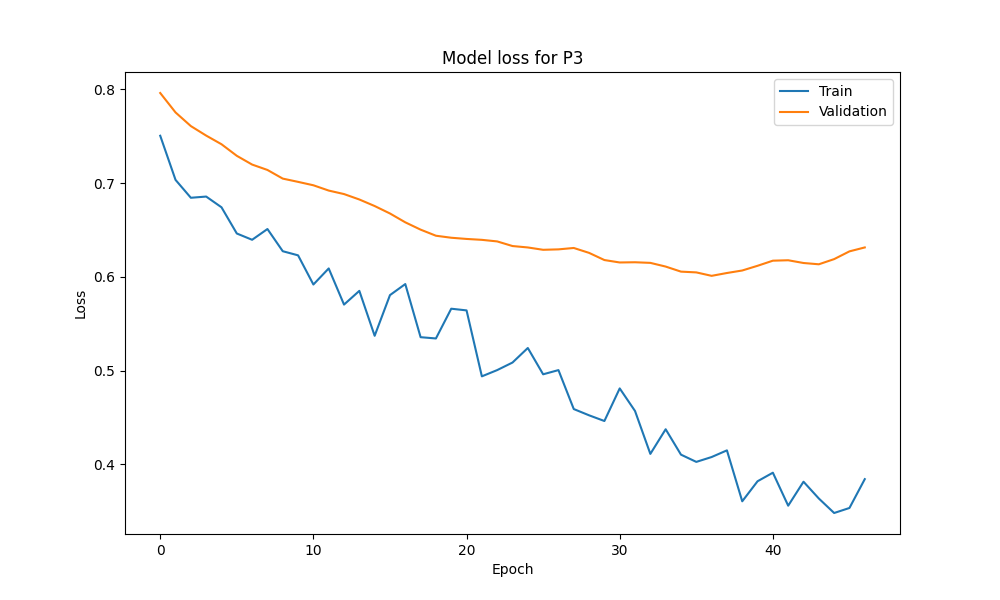

- ANN Engineering: Custom Keras/TensorFlow neural network with dropout regularization, Adam optimization, and early stopping callbacks.

- Classical ML Baselines: Comparative training and evaluation of Decision Tree, KNN, and SVM classifiers for benchmarking.

- Validation Strategies: Held-out data splits, including multiple test sets, so that performance is estimated on data the models never saw during training.

- Metric-Rich Evaluation: Calculation and reporting of accuracy, precision, recall, F1-score, and visualization with confusion matrices.

- Reproducible Results: Model persistence (saving/loading) across all architectures for consistent evaluation.

- Visualization: Automated generation of comparative performance plots and confusion matrices using Matplotlib and Seaborn.
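The preprocessing feature listed above (standardization, feature selection, dimensionality reduction) can be sketched as a scikit-learn `Pipeline`. The specific choices here are assumptions for illustration: `SelectKBest` with the ANOVA F-test, `k=32`, 10 PCA components, and a synthetic 64-feature matrix in place of real EEG features.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical EEG feature matrix: 400 epochs x 64 features (e.g., per-channel band powers).
X, y = make_classification(n_samples=400, n_features=64, n_informative=10, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42
)

preprocess = Pipeline([
    ("scale", StandardScaler()),                     # zero mean, unit variance per feature
    ("select", SelectKBest(f_classif, k=32)),        # keep the 32 features with the highest ANOVA F-score
    ("pca", PCA(n_components=10, random_state=42)),  # project onto 10 principal components
])

# Fit on the training data only, then apply the same fitted transform to the test set.
Z_train = preprocess.fit_transform(X_train, y_train)
Z_test = preprocess.transform(X_test)
print(Z_train.shape, Z_test.shape)  # (300, 10) (100, 10)
```

Fitting every step on the training split alone keeps test-set information out of the scaler, the feature ranking, and the PCA basis.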
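The early stopping callback used for the ANN can be understood through its patience rule. In Keras this is configured with `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=..., restore_best_weights=True)` and passed to `model.fit(..., validation_data=..., callbacks=[...])`; the patience values below are assumptions. The framework-free sketch that follows uses a hypothetical helper, `early_stopping_epoch`, to show which epoch training would stop at and which epoch's weights would be restored.

```python
def early_stopping_epoch(val_losses, patience=5, min_delta=0.0):
    """Hypothetical helper: return (stop_epoch, best_epoch) for per-epoch validation losses.

    Follows the patience rule of Keras's EarlyStopping with monitor="val_loss":
    an epoch counts as an improvement only if the loss drops below the best value
    by more than min_delta; training stops after `patience` consecutive epochs
    without improvement. Epochs are 0-indexed.
    """
    best = float("inf")
    best_epoch = 0
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            # Improvement: remember it (restore_best_weights would keep these weights).
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    # Patience never ran out: training ends at the last epoch.
    return len(val_losses) - 1, best_epoch


losses = [0.9, 0.7, 0.6, 0.62, 0.61, 0.65, 0.63, 0.64]
print(early_stopping_epoch(losses, patience=3))  # (5, 2)
```

With `patience=3`, the loss last improves at epoch 2, so training halts at epoch 5 and, with `restore_best_weights=True`, the epoch-2 weights are kept.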
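One common way to obtain the held-out splits described above is two chained calls to `train_test_split`: first reserve a test set, then split the remainder into training and validation data (the validation part is what early stopping and model selection monitor). The 60/20/20 proportions, stratification, and random data are assumptions for illustration only.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical data: 1,000 EEG epochs x 16 features, 3 roughly balanced classes.
rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 16))
y = np.repeat([0, 1, 2], [334, 333, 333])

# First carve out a held-out test set (20%) that is never used during training or tuning.
X_rest, X_test, y_rest, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)
# Then split the remainder into training and validation sets.
X_train, X_val, y_train, y_val = train_test_split(
    X_rest, y_rest, test_size=0.25, stratify=y_rest, random_state=0
)

# 0.8 * 0.25 = 0.2, so the final proportions are 60% / 20% / 20%.
print(len(X_train), len(X_val), len(X_test))  # 600 200 200
```

Stratifying both splits keeps the class proportions of each subset close to those of the full dataset.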
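The evaluation metrics listed above map directly onto `sklearn.metrics`. The labels below are hypothetical predictions for a 3-class problem, chosen only to make the arithmetic easy to follow; macro averaging is an assumption about how per-class scores are combined.

```python
from sklearn.metrics import accuracy_score, confusion_matrix, precision_recall_fscore_support

# Hypothetical true and predicted labels for three EEG classes (not project data).
y_true = [0, 0, 0, 1, 1, 1, 2, 2, 2, 2]
y_pred = [0, 0, 1, 1, 1, 2, 2, 2, 2, 0]

acc = accuracy_score(y_true, y_pred)  # fraction of correct predictions
# average="macro" gives every class equal weight, which matters when classes are imbalanced.
precision, recall, f1, _ = precision_recall_fscore_support(y_true, y_pred, average="macro")
cm = confusion_matrix(y_true, y_pred)  # rows = true class, columns = predicted class

print(f"accuracy={acc:.2f} precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
print(cm)
```

Here 7 of 10 predictions are correct, so accuracy is 0.70, and the per-class precisions (2/3, 2/3, 3/4) average to a macro precision of 25/36 ≈ 0.694.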
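Model persistence with pickle, as listed under Reproducible Results, can be sketched as a save/reload round trip that checks the restored models reproduce the original predictions. The model choices, file names, and temporary directory are assumptions for illustration.

```python
import pickle
import tempfile
from pathlib import Path

from sklearn.datasets import make_classification
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for EEG features.
X, y = make_classification(n_samples=200, n_features=10, random_state=1)
models = {
    "decision_tree": DecisionTreeClassifier(random_state=1).fit(X, y),
    "knn": KNeighborsClassifier(n_neighbors=5).fit(X, y),
}

# Save each fitted model to its own file (hypothetical file names).
out_dir = Path(tempfile.mkdtemp())
for name, model in models.items():
    with open(out_dir / f"{name}.pkl", "wb") as fh:
        pickle.dump(model, fh)

# Reload and confirm each restored model reproduces the original predictions.
restored = {}
for name in models:
    with open(out_dir / f"{name}.pkl", "rb") as fh:
        restored[name] = pickle.load(fh)
    assert (restored[name].predict(X) == models[name].predict(X)).all()
```

Only unpickle files from trusted sources, since loading a pickle can execute arbitrary code. Keras models are usually saved with `model.save(...)` and reloaded with `tf.keras.models.load_model(...)` rather than pickled.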
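The visualization step can be sketched with Matplotlib alone: a bar chart comparing models next to an annotated confusion matrix, written to a file. The helper name `plot_results`, the layout, and the placeholder inputs are assumptions; the numbers passed in are dummies, not project results. With Seaborn, `seaborn.heatmap(cm, annot=True, fmt="d")` draws an equivalent confusion-matrix panel in one call.

```python
import os
import tempfile

import matplotlib
matplotlib.use("Agg")  # render to files without needing a display
import matplotlib.pyplot as plt
import numpy as np


def plot_results(scores, cm, class_names, path):
    """Hypothetical helper: save a per-model score bar chart beside an annotated confusion matrix."""
    fig, (ax_bar, ax_cm) = plt.subplots(1, 2, figsize=(10, 4))

    names = list(scores)
    ax_bar.bar(names, [scores[n] for n in names])
    ax_bar.set_ylim(0, 1)
    ax_bar.set_ylabel("Macro F1")
    ax_bar.set_title("Model comparison")

    im = ax_cm.imshow(cm, cmap="Blues")
    ticks = range(len(class_names))
    ax_cm.set_xticks(ticks)
    ax_cm.set_xticklabels(class_names)
    ax_cm.set_yticks(ticks)
    ax_cm.set_yticklabels(class_names)
    ax_cm.set_xlabel("Predicted")
    ax_cm.set_ylabel("True")
    # Write the count into each cell.
    for i in range(cm.shape[0]):
        for j in range(cm.shape[1]):
            ax_cm.text(j, i, str(cm[i, j]), ha="center", va="center")
    fig.colorbar(im, ax=ax_cm)
    fig.tight_layout()
    fig.savefig(path, dpi=100)
    plt.close(fig)


# Placeholder inputs for illustration only, not project results.
path = os.path.join(tempfile.mkdtemp(), "results.png")
plot_results(
    {"ANN": 0.5, "Decision Tree": 0.5, "KNN": 0.5, "SVM": 0.5},
    np.array([[2, 1, 0], [0, 2, 1], [1, 0, 3]]),
    ["class 0", "class 1", "class 2"],
    path,
)
```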

Technologies Used

- Python

- TensorFlow/Keras (for neural network modeling and training)

- scikit-learn (for Decision Tree, KNN, SVM, and preprocessing)

- NumPy & Pandas (data manipulation and processing)

- Matplotlib & Seaborn (visualization)

- Pickle (model serialization)

Results

The experiments show that the ANN matched or outperformed the classical baselines, particularly when trained with the Adam optimizer and regularized with dropout and early stopping. These choices contributed to stable convergence and good generalization, and tracking several metrics exposed the strengths and weaknesses of each algorithm across multiple EEG channels.
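A per-algorithm comparison of this kind can be outlined as a loop that fits each classical baseline on the same split and reports the same metrics. This is a sketch on synthetic data; the hyperparameters (`max_depth=8`, `n_neighbors=7`, an RBF SVM with `C=1.0`) are assumptions, and any numbers it prints say nothing about the project's actual results.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score, f1_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for EEG features with three classes.
X, y = make_classification(
    n_samples=600, n_features=24, n_informative=8, n_classes=3, random_state=7
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=7
)

baselines = {
    # Trees split on thresholds, so they do not need feature scaling.
    "Decision Tree": DecisionTreeClassifier(max_depth=8, random_state=7),
    # KNN and SVM depend on distances, so each gets its own scaler inside a pipeline.
    "KNN": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=7)),
    "SVM": make_pipeline(StandardScaler(), SVC(kernel="rbf", C=1.0)),
}

results = {}
for name, model in baselines.items():
    model.fit(X_train, y_train)
    pred = model.predict(X_test)
    results[name] = {
        "accuracy": accuracy_score(y_test, pred),
        "macro_f1": f1_score(y_test, pred, average="macro"),
    }

# Print models ranked by macro F1.
for name, m in sorted(results.items(), key=lambda kv: kv[1]["macro_f1"], reverse=True):
    print(f"{name:<14} acc={m['accuracy']:.3f}  macro-F1={m['macro_f1']:.3f}")
```

Evaluating every model on an identical test split with identical metrics is what makes the comparison meaningful; an ANN's predictions can be scored with the same two metric calls.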

Future Work

Planned extensions include deeper neural architectures, ensembling strategies, and more advanced signal processing for feature extraction. Systematic hyperparameter optimization and explainable AI techniques could also be integrated to improve both accuracy and interpretability of the EEG classification pipeline.